🔹【New Paper】The Principle of Predictable Intervention: A Universal Constraint on Actionable Intelligence

📅 Published on July 11, 2025

📌 Read the full paper on Zenodo

✍️ Author: Xuewu Liu

🧠 Abstract

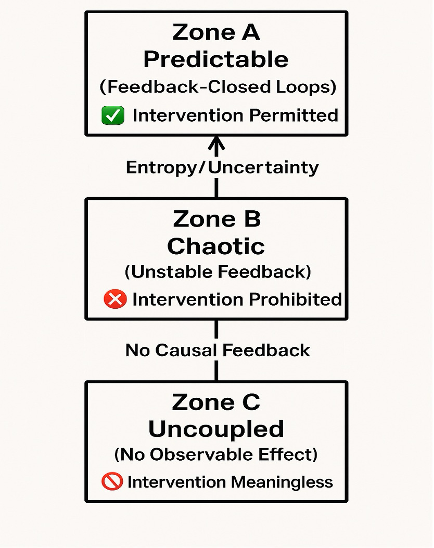

This paper introduces the Principle of Predictable Intervention (PPI), a proposed universal constraint on intelligent action within complex systems. Unlike traditional definitions of intelligence grounded in epistemology or computation, PPI focuses on the boundary within which intelligent interventions remain sustainable and meaningful.

The core claim is simple but powerful:

“No system can tolerate sustained interventions at layers where outcomes become unpredictable relative to inputs.”

This principle applies across disciplines—artificial intelligence, medicine, governance, and the philosophy of mind—and offers a new lens to examine the limitations of decision-making, autonomy, and agency in real-world systems.

📐 Why This Principle Matters

Most modern systems, from neural networks to healthcare institutions, operate on the assumption that more control, more data, and more precision lead to better outcomes. But what if that assumption is structurally flawed?

PPI reframes the problem: intelligent intervention must be confined to the layers of a system where outcomes remain predictable relative to inputs.

Where feedback breaks down, whether through chaos or decoupling, intelligence itself loses coherence.
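To make the chaos case concrete, here is a minimal sketch using the logistic map, a standard textbook toy system. It is not taken from the paper; the function names, the starting value 0.2, the perturbation 1e-9, and the two growth rates are all assumptions chosen for illustration. The sketch compares how far two nearly identical inputs drift apart in a stable regime versus a chaotic one.

```python
def logistic_trajectory(x0, r, steps=50):
    """Iterate the logistic map x -> r * x * (1 - x) and return all states."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def max_gap(x0, delta, r, steps=50):
    """Largest distance between trajectories started at x0 and x0 + delta.

    A small gap means a tiny input change yields a tiny outcome change
    (outcomes are predictable relative to inputs); a large gap means the
    input change was amplified until the two outcomes no longer agree.
    """
    a = logistic_trajectory(x0, r, steps)
    b = logistic_trajectory(x0 + delta, r, steps)
    return max(abs(p - q) for p, q in zip(a, b))


# Hypothetical illustration values, not from the paper.
stable_gap = max_gap(0.2, 1e-9, r=2.5)   # r = 2.5: settles to a fixed point
chaotic_gap = max_gap(0.2, 1e-9, r=4.0)  # r = 4.0: fully chaotic regime

print(f"stable regime  (r=2.5): max gap = {stable_gap:.3e}")
print(f"chaotic regime (r=4.0): max gap = {chaotic_gap:.3e}")
```

In the stable regime the perturbation stays tiny, so an intervention on the input has a foreseeable effect. In the chaotic regime the same perturbation grows until the two trajectories are unrelated, which is one simple reading of outcomes becoming unpredictable relative to inputs.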

🔍 Key Applications

AI Safety: Why models that optimize without bounded predictability can collapse into catastrophic loops.

Medicine: Why over-intervention in biological systems (like cancer treatment) often backfires when predictability breaks down.

Governance: Why centralized policies may fail in chaotic systems and local predictability must be preserved.

Mind and Consciousness: Why free will may require feedback-stable boundaries to be physically meaningful.

📎 Citation

Liu, X. (2025). The Principle of Predictable Intervention: A Universal Constraint on Actionable Intelligence in Complex Systems. Zenodo. https://doi.org/10.5281/zenodo.15861785

💬 Final Thoughts

This work is not a conclusion, but a structural foundation for a more rigorous understanding of how—and where—intelligent action can be meaningfully applied.

If you work in AI research, complex systems, theoretical medicine, or philosophy of mind, I invite you to read the full text and consider its implications in your own field.

Feedback, critique, or collaboration ideas are welcome.

✉️ You can reach me directly at: xuewu.liu@cdsxcancer.com